Red Hat Virtualization 4.4

Managing virtual machines in Red Hat Virtualization

Abstract

This document describes the installation, configuration, and administration of virtual machines in Red Hat Virtualization.

Chapter 1. Introduction

A virtual machine is a software implementation of a computer. The Red Hat Virtualization environment enables you to create virtual desktops and virtual servers.

Virtual machines consolidate computing tasks and workloads. In traditional computing environments, workloads usually run on individually administered and upgraded servers. Virtual machines reduce the amount of hardware and administration required to run the same computing tasks and workloads.

1.1. Audience

Most virtual machine tasks in Red Hat Virtualization can be performed in both the VM Portal and the Administration Portal. However, the user interface differs between the two portals, and some administrative tasks require access to the Administration Portal. Tasks that can only be performed in the Administration Portal are identified as such in this book. Your permission level determines which portal you use and which tasks you can perform in each. Virtual machine permissions are explained in Virtual Machines and Permissions.

The VM Portal’s user interface is described in the Introduction to the VM Portal.

The Administration Portal’s user interface is described in the Administration Guide.

The creation and management of virtual machines through the Red Hat Virtualization REST API is documented in the REST API Guide.

1.2. Supported Virtual Machine Operating Systems

See Certified Guest Operating Systems in Red Hat OpenStack Platform, Red Hat Virtualization and OpenShift Virtualization for a current list of supported operating systems.

For information on customizing the operating systems, see Configuring operating systems with osinfo.

1.3. Virtual Machine Performance Parameters

For information on the parameters that Red Hat Virtualization virtual machines can support, see Red Hat Enterprise Linux technology capabilities and limits and Virtualization limits for Red Hat Virtualization.

1.4. Installing Supporting Components on Client Machines

1.4.1. Installing Console Components

A console is a graphical window that allows you to view the startup screen, shutdown screen, and desktop of a virtual machine, and to interact with that virtual machine as you would with a physical machine. In Red Hat Virtualization, the default application for opening a console to a virtual machine is Remote Viewer, which must be installed on the client machine before use.

1.4.1.1. Installing Remote Viewer on Red Hat Enterprise Linux

The Remote Viewer application provides users with a graphical console for connecting to virtual machines. Once installed, it is launched automatically when you open a SPICE session with a virtual machine. It can also be used as a standalone application. Remote Viewer is included in the virt-viewer package provided by the base Red Hat Enterprise Linux Workstation and Red Hat Enterprise Linux Server repositories.

Procedure

- Install the virt-viewer package:

# dnf install virt-viewer

- Restart your browser for the changes to take effect.

You can now connect to your virtual machines using either the SPICE protocol or the VNC protocol.

1.4.1.2. Installing Remote Viewer on Windows

The Remote Viewer application provides users with a graphical console for connecting to virtual machines. Once installed, it is launched automatically when you open a SPICE session with a virtual machine. It can also be used as a standalone application.

Installing Remote Viewer on Windows

- Open a web browser and download one of the following installers according to the architecture of your system.

  - Virt Viewer for 32-bit Windows: https://your-manager-fqdn/ovirt-engine/services/files/spice/virt-viewer-x86.msi

  - Virt Viewer for 64-bit Windows: https://your-manager-fqdn/ovirt-engine/services/files/spice/virt-viewer-x64.msi

- Open the folder where the file was saved.

- Double-click the file.

- Click Run if prompted by a security warning.

- Click Yes if prompted by User Account Control.

Remote Viewer is installed, and you can open it from the Remote Viewer shortcut in the VirtViewer folder under All Programs in the Start menu.

1.4.1.3. Installing usbdk on Windows

usbdk is a driver that gives remote-viewer exclusive access to USB devices on Windows operating systems. Installing usbdk requires Administrator privileges. The previously supported USB Clerk option is deprecated and no longer supported.

Installing usbdk on Windows

- Open a web browser and download one of the following installers according to the architecture of your system.

  - usbdk for 32-bit Windows: https://[your manager’s address]/ovirt-engine/services/files/spice/usbdk-x86.msi

  - usbdk for 64-bit Windows: https://[your manager’s address]/ovirt-engine/services/files/spice/usbdk-x64.msi

- Open the folder where the file was saved.

- Double-click the file.

- Click Run if prompted by a security warning.

- Click Yes if prompted by User Account Control.
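If you stage the Windows installers on a Linux machine before distributing them, the download URLs listed in the two procedures above can be built from the Manager's FQDN, the package, and the architecture. The sketch below assumes that URL pattern holds for both virt-viewer and usbdk; spice_installer_url is a hypothetical helper, not part of Red Hat Virtualization, and manager.example.com is a placeholder.

```shell
#!/bin/sh
# spice_installer_url: hypothetical helper that prints the download URL of a
# SPICE client installer served by the Manager, following the pattern
#   https://<manager-fqdn>/ovirt-engine/services/files/spice/<package>-<arch>.msi
spice_installer_url() {
  local fqdn="$1" package="$2" arch="$3"
  case "$package" in
    virt-viewer|usbdk) ;;                     # the two installers listed above
    *) echo "unknown package: $package" >&2; return 1 ;;
  esac
  case "$arch" in
    x86|x64) ;;                               # x86 = 32-bit, x64 = 64-bit Windows
    *) echo "architecture must be x86 or x64" >&2; return 1 ;;
  esac
  printf 'https://%s/ovirt-engine/services/files/spice/%s-%s.msi\n' \
    "$fqdn" "$package" "$arch"
}

# Example (manager.example.com is a placeholder):
#   curl -fLO "$(spice_installer_url manager.example.com virt-viewer x64)"
```

If the Manager uses its own certificate authority, curl may also need --cacert pointing at that CA certificate.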

Chapter 2. Installing Red Hat Enterprise Linux Virtual Machines

Installing a Red Hat Enterprise Linux virtual machine involves the following key steps:

- Create a virtual machine. You must add a virtual disk for storage, and a network interface to connect the virtual machine to the network.

- Start the virtual machine and install an operating system. See your operating system’s documentation for instructions.

  - Red Hat Enterprise Linux 6: Installing Red Hat Enterprise Linux 6.9 for all architectures

  - Red Hat Enterprise Linux 7: Installing Red Hat Enterprise Linux 7 on all architectures

  - Red Hat Enterprise Linux Atomic Host 7: Red Hat Enterprise Linux Atomic Host 7 Installation and Configuration Guide

  - Red Hat Enterprise Linux 8: Installing Red Hat Enterprise Linux 8 using the graphical user interface

- Enable the required repositories for your operating system.

- Install guest agents and drivers for additional virtual machine functionality.

2.1. Creating a virtual machine

When creating a new virtual machine, you specify its settings. You can edit some of these settings later, including the chipset and BIOS type. For more information, see UEFI and the Q35 chipset in the Administration Guide.

Prerequisites

Before you can use this virtual machine, you must:

- Install an operating system, using one of the following methods:

  - Use a pre-installed image by Creating a Cloned Virtual Machine Based on a Template

  - Use a pre-installed image from an attached pre-installed Disk

  - Install an operating system through the PXE boot menu or from an ISO file

- Register with the Content Delivery Network

Procedure

- Click → .

- Click New. This opens the New Virtual Machine window.

- Select an Operating System from the drop-down list.

  If you selected Red Hat Enterprise Linux CoreOS as the operating system, you may need to set the initialization method by configuring Ignition settings in the Initial Run tab of the Advanced Options. See Configuring Ignition.

- Enter a Name for the virtual machine.

- Add storage to the virtual machine: under Instance Images, click Attach or Create to select or create a virtual disk.

  - Click Attach and select an existing virtual disk.

    or

  - Click Create and enter a Size(GB) and Alias for a new virtual disk. You can accept the default settings for all other fields, or change them if required. See Explanation of settings in the New Virtual Disk and Edit Virtual Disk windows for more details on the fields for all disk types.

- Connect the virtual machine to the network. Add a network interface by selecting a vNIC profile from the nic1 drop-down list at the bottom of the General tab.

- Specify the virtual machine’s Memory Size on the System tab.

- In the Boot Options tab, choose the First Device that the virtual machine will use to boot.

- You can accept the default settings for all other fields, or change them if required. For more details on all fields in the New Virtual Machine window, see Explanation of settings in the New Virtual Machine and Edit Virtual Machine Windows.

- Click OK.

The new virtual machine is created and displays in the list of virtual machines with a status of Down.

Configuring Ignition

Ignition is the utility that is used by Red Hat Enterprise Linux CoreOS to manipulate disks during initial configuration. It completes common disk tasks, including partitioning disks, formatting partitions, writing files, and configuring users. On first boot, Ignition reads its configuration from the installation media or the location that you specify and applies the configuration to the machines.

Once Ignition has been configured as the initialization method, it cannot be reversed or re-configured.

- In the Add Virtual Machine or Edit Virtual Machine screen, click Show Advanced Options.

- In the Initial Run tab, select the Ignition 2.3.0 option and enter the VM Hostname.

- Expand the Authorization option, enter a hashed (SHA-512) password, and enter the password again to verify.

- If you are using SSH keys for authorization, enter them in the space provided.

- You can also enter a custom Ignition script in JSON format in the Ignition Script field. This script runs on the virtual machine when it starts. The scripts you enter in this field are custom JSON sections that are added to those produced by the Manager, and allow you to use custom Ignition instructions.

  If the Red Hat Enterprise Linux CoreOS image you are using contains an Ignition version different from 2.3.0, you need to use a script in the Ignition Script field to enforce the Ignition version included in your Red Hat Enterprise Linux CoreOS image.

When you use an Ignition script, the script instructions take precedence over and override any conflicting Ignition settings you configured in the UI.
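When the Red Hat Enterprise Linux CoreOS image ships a different Ignition version, the Ignition Script field needs JSON that declares that version. The sketch below prints a minimal config containing only ignition.version and an SSH key for the core user. It assumes the version you pass matches the Ignition spec your image supports; the version and key in the example are placeholders, and ignition_stub is a hypothetical helper. Keep in mind that anything set in the script overrides conflicting settings from the UI.

```shell
#!/bin/sh
# ignition_stub: hypothetical helper that prints a minimal Ignition config.
# Assumptions: VERSION matches the Ignition spec supported by your RHCOS
# image, and "core" (the default RHCOS user) should receive the SSH key.
ignition_stub() {
  local version="$1" ssh_key="$2"
  # The JSON is built by hand, so refuse characters that would break quoting.
  case "$version$ssh_key" in
    *'"'* | *'\'*) echo "double quotes and backslashes are not supported" >&2; return 1 ;;
  esac
  cat <<EOF
{
  "ignition": { "version": "$version" },
  "passwd": {
    "users": [
      { "name": "core", "sshAuthorizedKeys": ["$ssh_key"] }
    ]
  }
}
EOF
}

# Example: paste the output into the Ignition Script field.
# The version shown is only an example; use the one in your image.
#   ignition_stub 3.1.0 "$(cat ~/.ssh/id_rsa.pub)"
```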

2.2. Starting the Virtual Machine

2.2.1. Starting a Virtual Machine

Procedure

- Click → and select a virtual machine with a status of Down.

- Click Run.

The Status of the virtual machine changes to Up, and the operating system installation begins. Open a console to the virtual machine if one does not open automatically.

A virtual machine will not start on a host with an overloaded CPU. By default, a host’s CPU is considered overloaded if it has a load of more than 80% for 5 minutes, but these values can be changed using scheduling policies. See Scheduling Policies in the Administration Guide for more information.
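The default overload rule can be read as: every load sample in the last five minutes is above 80%. The sketch below encodes that reading as a hypothetical helper for reasoning about host load. It is not the Manager's scheduler, which is configured through scheduling policies, and the one-sample-per-minute input is an assumption made only for illustration.

```shell
#!/bin/sh
# cpu_overloaded: hypothetical illustration of the default rule above.
# Arguments: CPU load samples in integer percent, one per minute, oldest first.
# Returns 0 (overloaded) only if each of the last WINDOW samples is above
# THRESHOLD; the defaults mirror the documented 80% and 5 minutes.
cpu_overloaded() {
  local threshold="${THRESHOLD:-80}" window="${WINDOW:-5}" sample
  [ "$#" -ge "$window" ] || return 1        # not enough history yet
  shift $(( $# - window ))                  # keep only the last $window samples
  for sample in "$@"; do
    [ "$sample" -gt "$threshold" ] || return 1
  done
  return 0
}

# Example: a single minute at exactly 80% breaks the streak.
#   cpu_overloaded 85 90 81 95 82 && echo overloaded         # prints "overloaded"
#   cpu_overloaded 85 90 80 95 82 || echo "not overloaded"   # prints "not overloaded"
```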

Troubleshooting

Scenario — the virtual machine fails to boot with the following error message:

Boot failed: not a bootable disk - No Bootable device

Possible solutions to this problem:

- Make sure that the hard disk is selected in the boot sequence and that the disk the virtual machine boots from is set as Bootable.

- Create a Cloned Virtual Machine Based on a Template.

- Create a new virtual machine with a local boot disk managed by RHV that contains the OS and application binaries.

- Install the OS by booting from the Network (PXE) boot option.

Scenario — the virtual machine on IBM POWER9 fails to boot with the following error message:

qemu-kvm: Requested count cache flush assist capability level not supported by kvm, try appending -machine cap-ccf-assist=off

Default risk level protections can prevent VMs from starting on IBM POWER9. To resolve this issue:

- Create or edit the /var/lib/obmc/cfam_overrides file on the BMC.

- Set the firmware risk level to 0:

# Control speculative execution mode
0 0x283a 0x00000000 # bits 28:31 are used for init level -- in this case 0 Kernel and User protection (safest, default)
0 0x283F 0x20000000 # Indicate override register is valid

- Reboot the host system for the changes to take effect.

Overriding the risk level can cause unexpected behavior when running virtual machines.

2.2.2. Opening a console to a virtual machine

Use Remote Viewer to connect to a virtual machine.

To allow other users to connect to the virtual machine, make sure you shut down and restart the virtual machine when you are finished using the console. Alternatively, the administrator can select the Disable strict user checking option to eliminate the need for a reboot between users. See Virtual Machine Console Settings Explained for more information.

Procedure

- Install Remote Viewer if it is not already installed. See Installing Console Components.

- Click → and select a virtual machine.

- Click Console. By default, the browser prompts you to download a file named console.vv. When you click to open the file, a console window opens for the virtual machine. You can configure your browser to open these files automatically, so that clicking Console simply opens the console.

console.vv expires after 120 seconds. If more than 120 seconds elapse between the time the file is downloaded and the time that you open the file, click Console again.
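Because console.vv is only valid for 120 seconds, a small wrapper can check the file's age before handing it to Remote Viewer. This sketch assumes GNU stat (stat -c %Y) and treats the file's modification time as the download time; vv_is_fresh and open_console are hypothetical names. The check only avoids a wasted attempt; it cannot extend the file's validity.

```shell
#!/bin/sh
# vv_is_fresh: succeed if FILE exists and is younger than MAX_AGE seconds
# (default 120, the console.vv lifetime). Assumes GNU `stat -c %Y`.
vv_is_fresh() {
  local file="$1" max_age="${2:-120}" now mtime
  [ -f "$file" ] || return 1
  now=$(date +%s)
  mtime=$(stat -c %Y "$file") || return 1
  [ $(( now - mtime )) -lt "$max_age" ]
}

# open_console: launch Remote Viewer (from the virt-viewer package) on a
# fresh console.vv, or ask the user to click Console again.
open_console() {
  local file="${1:-console.vv}"
  if vv_is_fresh "$file"; then
    remote-viewer "$file"
  else
    echo "$file is missing or older than 120 seconds; click Console again." >&2
    return 1
  fi
}
```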

2.2.3. Opening a Serial Console to a Virtual Machine

You can access a virtual machine’s serial console from the command line instead of opening a console from the Administration Portal or the VM Portal. The serial console is emulated through VirtIO channels, using SSH and key pairs. The Manager acts as a proxy for the connection, provides information about virtual machine placement, and stores the authentication keys. You can add public keys for each user from either the Administration Portal or the VM Portal. You can access serial consoles for only those virtual machines for which you have appropriate permissions.

To access the serial console of a virtual machine, the user must have UserVmManager, SuperUser, or UserInstanceManager permission on that virtual machine. These permissions must be explicitly defined for each user. It is not enough to assign these permissions to Everyone.

The serial console is accessed through TCP port 2222 on the Manager. This port is opened during engine-setup on new installations. To change the port, see ovirt-vmconsole/README.md.

You must configure the following firewall rules to allow a serial console:

- Rule M3 for the Manager firewall

- Rule H2 for the host firewall

The serial console relies on the ovirt-vmconsole and ovirt-vmconsole-proxy packages on the Manager, and on the ovirt-vmconsole and ovirt-vmconsole-host packages on the hosts.

These packages are installed by default on new installations. To install the packages on existing installations, reinstall the hosts.

Enabling a Virtual Machine’s Serial Console

- On the virtual machine whose serial console you are accessing, add the following lines to /etc/default/grub:

GRUB_CMDLINE_LINUX_DEFAULT="console=tty0 console=ttyS0,115200n8"
GRUB_TERMINAL="console serial"
GRUB_SERIAL_COMMAND="serial --speed=115200 --unit=0 --word=8 --parity=no --stop=1"

GRUB_CMDLINE_LINUX_DEFAULT applies this configuration only to the default menu entry. Use GRUB_CMDLINE_LINUX to apply the configuration to all the menu entries. If these lines already exist in /etc/default/grub, update them. Do not duplicate them.

- Rebuild the GRUB 2 configuration file:

  - BIOS-based machines:

# grub2-mkconfig -o /boot/grub2/grub.cfg

  - UEFI-based machines:

# grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg

  See GRUB 2 over a Serial Console in the Red Hat Enterprise Linux 7 System Administrator’s Guide for details.

- On the client machine from which you are accessing the virtual machine serial console, generate an SSH key pair. The Manager supports standard SSH key types, for example, an RSA key:

# ssh-keygen -t rsa -b 2048 -f .ssh/serialconsolekey

This command generates a public key and a private key.

- In the Administration Portal or the VM Portal, click the name of the signed-in user on the header bar and click Options. This opens the Edit Options window.

- In the User’s Public Key text field, paste the public key of the client machine that will be used to access the serial console.

- Click → and select a virtual machine.

- Click Edit.

- In the Console tab of the Edit Virtual Machine window, select the Enable VirtIO serial console check box.
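The GRUB edits in the first two steps can be scripted so that re-running them never duplicates lines. The sketch below replaces an existing KEY= line (dropping any repeats) or appends one if none exists, and picks the grub.cfg path by checking for /sys/firmware/efi, which exists only on UEFI-booted systems. set_grub_var and grub_cfg_path are hypothetical helpers. Note that set_grub_var overwrites the whole value, so merge any kernel arguments already present in GRUB_CMDLINE_LINUX_DEFAULT yourself.

```shell
#!/bin/sh
# set_grub_var: set KEY="VALUE" in an /etc/default/grub-style FILE.
# Replaces the first KEY= line, drops later duplicates, or appends if absent.
set_grub_var() {
  local file="$1" key="$2" value="$3" tmp status
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    BEGIN { line = k "=\"" v "\""; seen = 0 }
    $0 ~ ("^" k "=") { if (!seen) print line; seen = 1; next }
    { print }
    END { if (!seen) print line }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps owner and mode
  status=$?
  rm -f "$tmp"
  return "$status"
}

# grub_cfg_path: print the grub.cfg output path for BIOS or UEFI boot.
# EFI_DIR can be overridden for testing; it defaults to /sys/firmware/efi.
grub_cfg_path() {
  if [ -d "${EFI_DIR:-/sys/firmware/efi}" ]; then
    echo /boot/efi/EFI/redhat/grub.cfg
  else
    echo /boot/grub2/grub.cfg
  fi
}

# Example, run as root on the virtual machine:
#   set_grub_var /etc/default/grub GRUB_CMDLINE_LINUX_DEFAULT "console=tty0 console=ttyS0,115200n8"
#   set_grub_var /etc/default/grub GRUB_TERMINAL "console serial"
#   set_grub_var /etc/default/grub GRUB_SERIAL_COMMAND "serial --speed=115200 --unit=0 --word=8 --parity=no --stop=1"
#   grub2-mkconfig -o "$(grub_cfg_path)"
```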

Connecting to a Virtual Machine’s Serial Console

On the client machine, connect to the virtual machine’s serial console:

- If a single virtual machine is available, this command connects the user to that virtual machine:

# ssh -t -p 2222 ovirt-vmconsole@Manager_FQDN -i .ssh/serialconsolekey
Red Hat Enterprise Linux Server release 6.7 (Santiago)
Kernel 2.6.32-573.3.1.el6.x86_64 on an x86_64
USER login:

- If more than one virtual machine is available, this command lists the available virtual machines and their IDs:

# ssh -t -p 2222 ovirt-vmconsole@Manager_FQDN -i .ssh/serialconsolekey list
1. vm1 [vmid1]
2. vm2 [vmid2]
3. vm3 [vmid3]
> 2
Red Hat Enterprise Linux Server release 6.7 (Santiago)
Kernel 2.6.32-573.3.1.el6.x86_64 on an x86_64
USER login:

  Enter the number of the machine to which you want to connect, and press Enter.

- Alternatively, connect directly to a virtual machine using its unique identifier or its name:

# ssh -t -p 2222 ovirt-vmconsole@Manager_FQDN connect --vm-id vmid1

# ssh -t -p 2222 ovirt-vmconsole@Manager_FQDN connect --vm-name vm1
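If you connect often, an entry in ~/.ssh/config can carry the port, user, and key from the commands above. The sketch below prints such an entry. rhv-console is an arbitrary alias, vmconsole_ssh_config is a hypothetical helper, and RequestTTY yes is the configuration-file counterpart of ssh -t.

```shell
#!/bin/sh
# vmconsole_ssh_config: print an ssh_config(5) stanza for the serial console
# proxy on the Manager. Arguments: Manager FQDN, optional private key path.
vmconsole_ssh_config() {
  local manager_fqdn="$1" key="${2:-$HOME/.ssh/serialconsolekey}"
  cat <<EOF
Host rhv-console
    HostName $manager_fqdn
    Port 2222
    User ovirt-vmconsole
    IdentityFile $key
    RequestTTY yes
EOF
}

# Example (Manager_FQDN is a placeholder):
#   vmconsole_ssh_config Manager_FQDN >> ~/.ssh/config
#   ssh rhv-console                           # same as the first command above
#   ssh rhv-console connect --vm-name vm1
```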

Disconnecting from a Virtual Machine’s Serial Console

Press any key followed by ~ . to close a serial console session.

If the serial console session is disconnected abnormally, a TCP timeout occurs. You will be unable to reconnect to the virtual machine’s serial console until the timeout period expires.

2.2.4. Automatically Connecting to a Virtual Machine

Once you have logged in, you can automatically connect to a single running virtual machine. This can be configured in the VM Portal.

Procedure

- In the Virtual Machines page, click the name of the virtual machine to go to the details view.

- Click the pencil icon beside Console and set Connect automatically to ON.

The next time you log in to the VM Portal, if you have only one running virtual machine, you are automatically connected to that machine.

2.3. Enabling the Required Repositories

To install packages signed by Red Hat, you must register the target system to the Content Delivery Network. Then, use an entitlement from your subscription pool and enable the required repositories.

Enabling the Required Repositories Using Subscription Manager

- Register your system with the Content Delivery Network, entering your Customer Portal user name and password when prompted:

# subscription-manager register

- Locate the relevant subscription pools and note down the pool identifiers:

# subscription-manager list --available

- Use the pool identifiers to attach the required subscriptions:

# subscription-manager attach --pool=pool_id

- When a system is attached to a subscription pool with multiple repositories, only the main repository is enabled by default. Others are available, but disabled. Enable any additional repositories:

# subscription-manager repos --enable=repository

- Ensure that all packages currently installed are up to date:

# dnf upgrade --nobest
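When several subscriptions are available, the pool IDs can be pulled out of the list output instead of being copied by hand. This sketch assumes the output labels each entry with a Subscription Name: line followed by a Pool ID: line; pool_ids is a hypothetical filter, and name matching is a plain substring test.

```shell
#!/bin/sh
# pool_ids: read `subscription-manager list --available` output on stdin and
# print one pool ID per line. With an argument, print only pools whose
# Subscription Name contains that text (plain substring match).
pool_ids() {
  awk -F': *' -v pat="${1:-}" '
    /^Subscription Name:/ { name = $2 }
    /^Pool ID:/ && (pat == "" || index(name, pat) > 0) { print $2 }
  '
}

# Example: attach every pool whose name mentions Virtualization.
#   for id in $(subscription-manager list --available | pool_ids Virtualization); do
#     subscription-manager attach --pool="$id"
#   done
```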

2.4. Installing Guest Agents and Drivers

2.4.1. Red Hat Virtualization Guest agents, tools, and drivers

The Red Hat Virtualization guest agents, tools, and drivers provide additional functionality for virtual machines, such as gracefully shutting down or rebooting virtual machines from the VM Portal and Administration Portal. The tools and agents also provide information for virtual machines, including:

- Resource usage

- IP addresses

The guest agents, tools and drivers are distributed as an ISO file that you can attach to virtual machines. This ISO file is packaged as an RPM file that you can install and upgrade from the Manager machine.

You need to install the guest agents and drivers on a virtual machine to enable this functionality for that machine.

Table 2.1. Red Hat Virtualization Guest drivers

| Driver | Description | Works on |
|---|---|---|
| | Paravirtualized network driver provides enhanced performance over emulated devices like rtl. | Server and Desktop. |
| | Paravirtualized HDD driver offers increased I/O performance over emulated devices like IDE by optimizing the coordination and communication between the virtual machine and the hypervisor. The driver complements the software implementation of the virtio-device used by the host to play the role of a hardware device. | Server and Desktop. |
| | Paravirtualized iSCSI HDD driver offers similar functionality to the virtio-block device, with some additional enhancements. In particular, this driver supports adding hundreds of devices, and names devices using the standard SCSI device naming scheme. | Server and Desktop. |
| virtio-serial | Virtio-serial provides support for multiple serial ports. The improved performance is used for fast communication between the virtual machine and the host that avoids network complications. This fast communication is required for the guest agents and for other features such as clipboard copy-paste between the virtual machine and the host and logging. | Server and Desktop. |
| virtio-balloon | Virtio-balloon is used to control the amount of memory a virtual machine actually accesses. It offers improved memory overcommitment. | Server and Desktop. |
| | A paravirtualized display driver reduces CPU usage on the host and provides better performance through reduced network bandwidth on most workloads. | Server and Desktop. |

Table 2.2. Red Hat Virtualization Guest agents and tools

| Guest agent/tool | Description | Works on |
|---|---|---|
| | Used instead of | Server and Desktop. |
| SPICE agent | The SPICE agent supports multiple monitors and is responsible for client-mouse-mode support to provide a better user experience and improved responsiveness than the QEMU emulation. Cursor capture is not needed in client-mouse-mode. The SPICE agent reduces bandwidth usage when used over a wide area network by reducing the display level, including color depth, disabling wallpaper, font smoothing, and animation. The SPICE agent enables clipboard support allowing cut and paste operations for both text and images between client and virtual machine, and automatic guest display setting according to client-side settings. On Windows-based virtual machines, the SPICE agent consists of vdservice and vdagent. | Server and Desktop. |

2.4.2. Installing the Guest Agents and Drivers on Red Hat Enterprise Linux

The Red Hat Virtualization guest agents and drivers are provided by the Red Hat Virtualization Agent repository.

Red Hat Enterprise Linux 8 virtual machines use the qemu-guest-agent service, which is installed and enabled by default, instead of the ovirt-guest-agent service. If you need to manually install the guest agent on RHEL 8, follow the procedure below.

Procedure

- Log in to the Red Hat Enterprise Linux virtual machine.

- Enable the Red Hat Virtualization Agent repository:

  - For Red Hat Enterprise Linux 6

# subscription-manager repos --enable=rhel-6-server-rhv-4-agent-rpms

  - For Red Hat Enterprise Linux 7

# subscription-manager repos --enable=rhel-7-server-rh-common-rpms

  - For Red Hat Enterprise Linux 8

# subscription-manager repos --enable=rhel-8-for-x86_64-appstream-rpms

- Install the guest agent and dependencies:

  - For Red Hat Enterprise Linux 6 or 7, install the ovirt guest agent:

# yum install ovirt-guest-agent-common

  - For Red Hat Enterprise Linux 8 and 9, install the qemu guest agent:

# yum install qemu-guest-agent

- Start and enable the ovirt-guest-agent service:

  - For Red Hat Enterprise Linux 6

# service ovirt-guest-agent start
# chkconfig ovirt-guest-agent on

  - For Red Hat Enterprise Linux 7

# systemctl start ovirt-guest-agent
# systemctl enable ovirt-guest-agent

- Start and enable the qemu-guest-agent service:

  - For Red Hat Enterprise Linux 6

# service qemu-ga start
# chkconfig qemu-ga on

  - For Red Hat Enterprise Linux 7, 8 or 9

# systemctl start qemu-guest-agent
# systemctl enable qemu-guest-agent

The guest agent now passes usage information to the Red Hat Virtualization Manager. You can configure the oVirt guest agent in the /etc/ovirt-guest-agent.conf file.
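The repository and package to use depend only on the guest's major release, so they can be looked up from /etc/redhat-release, which also exists on Red Hat Enterprise Linux 6 (where /etc/os-release is absent). The helpers below are hypothetical and only encode the choices listed in this procedure. No agent repository is listed here for Red Hat Enterprise Linux 9, so agent_repo rejects that release.

```shell
#!/bin/sh
# rhel_major: print the major release number from a redhat-release file,
# for example "Red Hat Enterprise Linux release 8.6 (Ootpa)" gives 8.
rhel_major() {
  sed -n 's/.*release \([0-9][0-9]*\).*/\1/p' "${1:-/etc/redhat-release}"
}

# agent_repo: Red Hat Virtualization Agent repository listed above per release.
agent_repo() {
  case "$1" in
    6) echo rhel-6-server-rhv-4-agent-rpms ;;
    7) echo rhel-7-server-rh-common-rpms ;;
    8) echo rhel-8-for-x86_64-appstream-rpms ;;
    *) echo "no agent repository listed for release $1" >&2; return 1 ;;
  esac
}

# agent_package: guest agent package listed above per release.
agent_package() {
  case "$1" in
    6|7) echo ovirt-guest-agent-common ;;
    8|9) echo qemu-guest-agent ;;
    *) echo "no guest agent listed for release $1" >&2; return 1 ;;
  esac
}

# Example, run as root on the guest:
#   major=$(rhel_major)
#   repo=$(agent_repo "$major") && subscription-manager repos --enable="$repo"
#   yum install "$(agent_package "$major")"
```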

Chapter 3. Installing Windows virtual machines

Installing a Windows virtual machine involves the following key steps:

- Create a blank virtual machine on which to install an operating system.

- Add a virtual disk for storage.

- Add a network interface to connect the virtual machine to the network.

- Attach the Windows guest tools CD to the virtual machine so that VirtIO-optimized device drivers can be installed during the operating system installation.

- Install a Windows operating system on the virtual machine. See your operating system’s documentation for instructions.

- During the installation, install guest agents and drivers for additional virtual machine functionality.

When all of these steps are complete, the new virtual machine is functional and ready to perform tasks.

3.1. Creating a virtual machine

When creating a new virtual machine, you specify its settings. You can edit some of these settings later, including the chipset and BIOS type. For more information, see UEFI and the Q35 chipset in the Administration Guide.

Prerequisites

Before you can use this virtual machine, you must:

- Install an operating system

- Install VirtIO-optimized disk and network drivers

Procedure

- You can change the default virtual machine name length with the engine-config tool. Run the following command on the Manager machine:

# engine-config --set MaxVmNameLength=integer

- Click → .

- Click New. This opens the New Virtual Machine window.

- Select an Operating System from the drop-down list.

- Enter a Name for the virtual machine.

- Add storage to the virtual machine: under Instance Images, click Attach or Create to select or create a virtual disk.

  - Click Attach and select an existing virtual disk.

    or

  - Click Create and enter a Size(GB) and Alias for a new virtual disk. You can accept the default settings for all other fields, or change them if required. See Explanation of settings in the New Virtual Disk and Edit Virtual Disk windows for more details on the fields for all disk types.

- Connect the virtual machine to the network. Add a network interface by selecting a vNIC profile from the nic1 drop-down list at the bottom of the General tab.

- Specify the virtual machine’s Memory Size on the System tab.

- In the Boot Options tab, choose the First Device that the virtual machine will use to boot.

- You can accept the default settings for all other fields, or change them if required. For more details on all fields in the New Virtual Machine window, see Explanation of settings in the New Virtual Machine and Edit Virtual Machine Windows.

- Click OK.

The new virtual machine is created and displays in the list of virtual machines with a status of Down.

3.2. Starting the virtual machine using Run Once

3.2.1. Installing Windows on VirtIO-optimized hardware

Install VirtIO-optimized disk and network device drivers during your Windows installation by attaching the virtio-win_version.iso file to your virtual machine. These drivers provide a performance improvement over emulated device drivers.

Use the Run Once option to attach the virtio-win_version.iso file in a one-off boot different from the Boot Options defined in the New Virtual Machine window.

Prerequisites

The following items have been added to the virtual machine:

- a Red Hat VirtIO network interface.

- a disk that uses the VirtIO interface. This disk can be on a

You can upload virtio-win_version.iso to a data storage domain.

Red Hat recommends uploading ISO images to the data domain with the Administration Portal or with the REST API. For more information, see Uploading Images to a Data Storage Domain in the Administration Guide.

If necessary, you can upload the virtio-win ISO file to an ISO storage domain that is hosted on the Manager. The ISO storage domain type is deprecated. For more information, see Uploading images to an ISO domain in the Administration Guide.

Procedure

To install the virtio-win drivers when installing Windows, complete the following steps:

- Click → and select a virtual machine.

- Click → .

- Expand the Boot Options menu.

- Select the Attach CD check box, and select a Windows ISO from the drop-down list.

- Select the Attach Windows guest tools CD check box.

- Move CD-ROM to the top of the Boot Sequence field.

- Configure other Run Once options as required. See Virtual Machine Run Once settings explained for more details.

- Click OK. The status of the virtual machine changes to Up, and the operating system installation begins.

  Open a console to the virtual machine if one does not open automatically during the Windows installation.

- When prompted to select a drive onto which you want to install Windows, click Load driver and OK.

- Under Select the driver to install, select the appropriate driver for the version of Windows. For example, for Windows Server 2019, select Red Hat VirtIO SCSI controller (E:\amd64\2k19\viostor.inf).

- Click Next.

The rest of the installation proceeds as normal.

3.2.2. Opening a console to a virtual machine

Use Remote Viewer to connect to a virtual machine.

To allow other users to connect to the virtual machine, make sure you shut down and restart the virtual machine when you are finished using the console. Alternatively, the administrator can select the Disable strict user checking option to eliminate the need for a reboot between users. See Virtual Machine Console Settings Explained for more information.

Procedure

- Install Remote Viewer if it is not already installed. See Installing Console Components.

- Click → and select a virtual machine.

- Click Console. By default, the browser prompts you to download a file named console.vv. When you click to open the file, a console window opens for the virtual machine. You can configure your browser to open these files automatically, so that clicking Console simply opens the console.

console.vv expires after 120 seconds. If more than 120 seconds elapse between the time the file is downloaded and the time that you open the file, click Console again.

3.3. Installing guest agents and drivers

3.3.1. Red Hat Virtualization Guest agents, tools, and drivers

The Red Hat Virtualization guest agents, tools, and drivers provide additional functionality for virtual machines, such as gracefully shutting down or rebooting virtual machines from the VM Portal and Administration Portal. The tools and agents also provide information for virtual machines, including:

- Resource usage

- IP addresses

The guest agents, tools and drivers are distributed as an ISO file that you can attach to virtual machines. This ISO file is packaged as an RPM file that you can install and upgrade from the Manager machine.

You need to install the guest agents and drivers on a virtual machine to enable this functionality for that machine.

Table 3.1. Red Hat Virtualization Guest drivers

| Driver | Description | Works on |
|---|---|---|
| | Paravirtualized network driver provides enhanced performance over emulated devices like rtl. | Server and Desktop. |
| | Paravirtualized HDD driver offers increased I/O performance over emulated devices like IDE by optimizing the coordination and communication between the virtual machine and the hypervisor. The driver complements the software implementation of the virtio-device used by the host to play the role of a hardware device. | Server and Desktop. |
| | Paravirtualized iSCSI HDD driver offers similar functionality to the virtio-block device, with some additional enhancements. In particular, this driver supports adding hundreds of devices, and names devices using the standard SCSI device naming scheme. | Server and Desktop. |
| virtio-serial | Virtio-serial provides support for multiple serial ports. The improved performance is used for fast communication between the virtual machine and the host that avoids network complications. This fast communication is required for the guest agents and for other features such as clipboard copy-paste between the virtual machine and the host and logging. | Server and Desktop. |
| virtio-balloon | Virtio-balloon is used to control the amount of memory a virtual machine actually accesses. It offers improved memory overcommitment. | Server and Desktop. |
| | A paravirtualized display driver reduces CPU usage on the host and provides better performance through reduced network bandwidth on most workloads. | Server and Desktop. |

Table 3.2. Red Hat Virtualization Guest agents and tools

| Guest agent/tool | Description | Works on |
|---|---|---|
| | Used instead of | Server and Desktop. |
| | The SPICE agent supports multiple monitors and is responsible for client-mouse-mode support, which provides a better user experience and improved responsiveness compared to QEMU emulation. Cursor capture is not needed in client-mouse-mode. The SPICE agent reduces bandwidth usage over a wide area network by reducing the display level, including color depth, and by disabling wallpaper, font smoothing, and animation. The SPICE agent enables clipboard support, allowing cut and paste operations for both text and images between the client and the virtual machine, and automatically adjusts the guest display settings according to client-side settings. On Windows-based virtual machines, the SPICE agent consists of vdservice and vdagent. | Server and Desktop. |

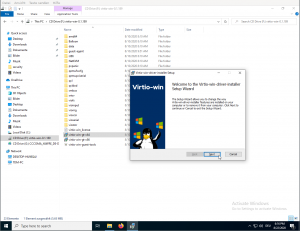

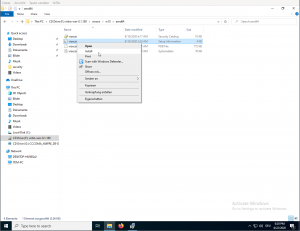

3.3.2. Installing the guest agents, tools, and drivers on Windows

Procedure

To install the guest agents, tools, and drivers on a Windows virtual machine, complete the following steps:

- On the Manager machine, install the virtio-win package:

  # dnf install virtio-win*

  After you install the package, the ISO file is located in /usr/share/virtio-win/virtio-win_version.iso on the Manager machine.

- Upload virtio-win_version.iso to a data storage domain. See Uploading Images to a Data Storage Domain in the Administration Guide for details.

- In the Administration Portal or VM Portal, if the virtual machine is running, use the Change CD button to attach the virtio-win_version.iso file to each of your virtual machines. If the virtual machine is powered off, click the Run Once button and attach the ISO as a CD.

- Log in to the virtual machine.

- Select the CD drive containing the virtio-win_version.iso file. You can complete the installation with either the GUI or the command line.

- Run the installer.

  - To install with the GUI, complete the following steps:

    - Double-click virtio-win-guest-tools.exe.

    - Click Next at the welcome screen.

    - Follow the prompts in the installation wizard.

    - When installation is complete, select Yes, I want to restart my computer now and click Finish to apply the changes.

  - To install silently with the command line, complete the following steps:

    - Open a command prompt with Administrator privileges.

    - Enter the msiexec command:

      D:\> msiexec /i "PATH_TO_MSI" /qn [/l*v "PATH_TO_LOG"] [/norestart] ADDLOCAL=ALL

      Other possible values for ADDLOCAL are listed below.

      For example, to run the installation when virtio-win-gt-x64.msi is on the D: drive, without saving the log, and then immediately restart the virtual machine, enter the following command:

      D:\> msiexec /i "virtio-win-gt-x64.msi" /qn ADDLOCAL=ALL

After installation completes, the guest agents and drivers pass usage information to the Red Hat Virtualization Manager and enable you to access USB devices and other functionality.
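If you automate the silent installation across several virtual machines, you can assemble the msiexec command line programmatically. The following Python sketch is an illustration, not part of the virtio-win package: the helper name msiexec_args is hypothetical, and it only mirrors the command syntax shown above, including the optional log and /norestart arguments. It assumes Python 3.9 or later.

```python
from typing import Optional, Sequence


def msiexec_args(
    msi_path: str,
    features: Sequence[str] = ("ALL",),
    log_path: Optional[str] = None,
    norestart: bool = False,
) -> list[str]:
    """Build the argument list for a silent virtio-win installation.

    Mirrors: msiexec /i "PATH_TO_MSI" /qn [/l*v "PATH_TO_LOG"] [/norestart] ADDLOCAL=...
    """
    for feature in features:
        # ADDLOCAL takes a single comma-separated value, so a comma or a space
        # inside one feature name would split or corrupt that value.
        if not feature or "," in feature or any(ch.isspace() for ch in feature):
            raise ValueError(f"invalid ADDLOCAL feature name: {feature!r}")

    args = ["msiexec", "/i", msi_path, "/qn"]
    if log_path is not None:
        args += ["/l*v", log_path]  # optional verbose log
    if norestart:
        args.append("/norestart")  # suppress the automatic restart
    # No spaces around "=" or between the comma-separated feature names.
    args.append("ADDLOCAL=" + ",".join(features))
    return args
```

On the virtual machine, you could pass the result to subprocess.run from an elevated Python session. Passing a list lets subprocess quote paths that contain spaces. Note that Windows Installer returns exit code 3010 when the installation succeeded but a restart is required, so inspect returncode rather than treating every nonzero code as a failure.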

3.3.3. Values for ADDLOCAL to customize virtio-win command-line installation

When installing virtio-win-gt-x64.msi or virtio-win-gt-x32.msi with the command line, you can install any one driver, or any combination of drivers.

You can also install specific agents, but you must also install each agent’s corresponding drivers.

The ADDLOCAL parameter of the msiexec command enables you to specify which drivers or agents to install. ADDLOCAL=ALL installs all drivers and agents. Other values are listed in the following tables.

Table 3.3. Possible values for ADDLOCAL to install drivers

| Value for ADDLOCAL | Driver Name | Description |
|---|---|---|
| | | The paravirtualized network driver provides enhanced performance over emulated devices such as rtl. |
| | | Controls the amount of memory a virtual machine actually accesses. It offers improved memory overcommitment. |
| | | QEMU pvpanic device driver. |
| | | QEMU FWCfg device driver. |
| | | QEMU PCI serial device driver. |
| | | A paravirtualized display driver reduces CPU usage on the host and provides better performance through reduced network bandwidth on most workloads. |
| | | VirtIO Input Driver. |
| | | VirtIO RNG device driver. |
| FE_vioscsi_driver | | VirtIO SCSI pass-through controller. |
| FE_vioserial_driver | | VirtIO Serial device driver. |
| FE_viostor_driver | | VirtIO Block driver. |

Table 3.4. Possible values for ADDLOCAL to install agents and required corresponding drivers

| Agent | Description | Corresponding driver(s) | Value for ADDLOCAL |
|---|---|---|---|
| Spice Agent | Supports multiple monitors, is responsible for client-mouse-mode support, reduces bandwidth usage, enables clipboard support between the client and the virtual machine, and provides a better user experience and improved responsiveness. | | FE_spice_Agent,FE_vioserial_driver,FE_spice_driver |

Examples

The following command installs only the VirtIO SCSI pass-through controller, the VirtIO Serial device driver, and the VirtIO Block driver:

D:\> msiexec /i "virtio-win-gt-x64.msi" /qn ADDLOCAL=FE_vioscsi_driver,FE_vioserial_driver,FE_viostor_driver

The following command installs only the Spice Agent and its required corresponding drivers:

D:\> msiexec /i "virtio-win-gt-x64.msi" /qn ADDLOCAL=FE_spice_Agent,FE_vioserial_driver,FE_spice_driver
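Because each agent needs its corresponding drivers, a script that builds ADDLOCAL values can add those drivers automatically. The following Python sketch uses a hypothetical AGENT_DRIVERS mapping that contains only the Spice Agent dependencies shown in the example above (FE_vioserial_driver and FE_spice_driver); any other agent you add to it must be taken from Table 3.4.

```python
from typing import Iterable

# Agent feature -> driver features it requires. Only the Spice Agent entry is
# taken from the example above; extend this hypothetical mapping from Table 3.4.
AGENT_DRIVERS: dict[str, tuple[str, ...]] = {
    "FE_spice_Agent": ("FE_vioserial_driver", "FE_spice_driver"),
}


def addlocal_value(requested: Iterable[str]) -> str:
    """Return an ADDLOCAL=... argument that also pulls in each agent's drivers.

    Features keep the order in which they are first seen and duplicates are
    dropped, so requesting a driver both explicitly and through an agent is safe.
    """
    features: list[str] = []
    for name in requested:
        for item in (name, *AGENT_DRIVERS.get(name, ())):
            if item not in features:
                features.append(item)
    return "ADDLOCAL=" + ",".join(features)
```

For example, addlocal_value(["FE_spice_Agent"]) returns ADDLOCAL=FE_spice_Agent,FE_vioserial_driver,FE_spice_driver, which is the value used in the second example command.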

For more information, see the following pages on the Microsoft Developer website:

- Windows Installer

- Command-Line Options for the Windows installer

- Property Reference for the Windows installer

Chapter 4. Additional Configuration

4.1. Configuring Operating Systems with osinfo

Red Hat Virtualization stores operating system configurations for virtual machines in /etc/ovirt-engine/osinfo.conf.d/00-defaults.properties. This file contains default values such as os.other.devices.display.protocols.value = spice/qxl,vnc/vga,vnc/qxl.

There are only a limited number of scenarios in which you would change these values:

- Adding an operating system that does not appear in the list of supported guest operating systems

- Adding a product key (for example, os.windows_10x64.productKey.value =)

- Configuring the sysprep path for a Windows virtual machine (for example, os.windows_10x64.sysprepPath.value = ${ENGINE_USR}/conf/sysprep/sysprep.w10x64)

Do not edit the actual 00-defaults.properties file. Changes will be overwritten if you upgrade or restore the Manager.

Do not change values that come directly from the operating system or the Manager, such as maximum memory size.

To change the operating system configurations, create an override file in /etc/ovirt-engine/osinfo.conf.d/. The file name must begin with a value greater than 00, so that the file appears after /etc/ovirt-engine/osinfo.conf.d/00-defaults.properties, and must end with the .properties extension.

For example, 10-productkeys.properties overrides the default file, 00-defaults.properties. The last file in the file list has precedence over earlier files.
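The precedence rule can be illustrated with a short Python sketch that reads the .properties files in lexical file-name order and lets later files override earlier ones. This is a simplified model under stated assumptions, not the Manager's own loader: the function names are hypothetical, the parser handles only plain key = value lines, and the lexical ordering is inferred from the 00 and 10 prefix convention described above. It assumes Python 3.9 or later.

```python
from pathlib import Path


def parse_properties(text: str) -> dict[str, str]:
    """Parse 'key = value' lines, skipping blank lines and '#' or '!' comments.

    Simplified reader: it does not handle the line continuations, escapes,
    or ':' separators that the full .properties format allows.
    """
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def merge_properties(files: dict[str, str]) -> dict[str, str]:
    """Merge file contents keyed by file name, in lexical file-name order.

    A key defined in a later file (for example 10-productkeys.properties)
    replaces the same key from an earlier file (00-defaults.properties).
    """
    merged: dict[str, str] = {}
    for name in sorted(files):
        merged.update(parse_properties(files[name]))
    return merged


def effective_osinfo(conf_dir: str = "/etc/ovirt-engine/osinfo.conf.d") -> dict[str, str]:
    """Read every *.properties file in conf_dir and merge them."""
    files = {p.name: p.read_text() for p in Path(conf_dir).glob("*.properties")}
    return merge_properties(files)
```

For example, if 00-defaults.properties leaves os.windows_10x64.productKey.value empty and 10-productkeys.properties sets it, merge_properties reports the value from 10-productkeys.properties.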

4.2. Configuring Single Sign-On for Virtual Machines

Configuring single sign-on, also known as password delegation, allows you to automatically log in to a virtual machine using the credentials you use to log in to the VM Portal. Single sign-on can be used on both Red Hat Enterprise Linux and Windows virtual machines.

Single sign-on is not supported for virtual machines running Red Hat Enterprise Linux 8.0.

If single sign-on to the VM Portal is enabled, single sign-on to virtual machines is not possible. With single sign-on to the VM Portal enabled, the VM Portal does not need to accept a password, so there is no password to delegate for signing in to virtual machines.

4.2.1. Configuring Single Sign-On for Red Hat Enterprise Linux Virtual Machines Using IPA (IdM)

To configure single sign-on for Red Hat Enterprise Linux virtual machines using GNOME and KDE graphical desktop environments and IPA (IdM) servers, you must install the ovirt-guest-agent package on the virtual machine and install the packages associated with your window manager.

The following procedure assumes that you have a working IPA configuration and that the IPA domain is already joined to the Manager. You must also ensure that the clocks on the Manager, the virtual machine and the system on which IPA (IdM) is hosted are synchronized using NTP.

Single sign-on with IPA (IdM) is deprecated for virtual machines running Red Hat Enterprise Linux version 7 or earlier and unsupported for virtual machines running Red Hat Enterprise Linux 8 or Windows operating systems.

Configuring Single Sign-On for Red Hat Enterprise Linux Virtual Machines

- Log in to the Red Hat Enterprise Linux virtual machine.

- Enable the repository:

  - For Red Hat Enterprise Linux 6:

    # subscription-manager repos --enable=rhel-6-server-rhv-4-agent-rpms

  - For Red Hat Enterprise Linux 7:

    # subscription-manager repos --enable=rhel-7-server-rh-common-rpms

- Download and install the guest agent, single sign-on, and IPA packages:

  # yum install ovirt-guest-agent-common ovirt-guest-agent-pam-module ovirt-guest-agent-gdm-plugin ipa-client

- Run the following command and follow the prompts to configure ipa-client and join the virtual machine to the domain:

  # ipa-client-install --permit --mkhomedir

  In environments that use DNS obfuscation, this command should be:

  # ipa-client-install --domain=FQDN --server=FQDN

- For Red Hat Enterprise Linux 7.2 and later:

  # authconfig --enablenis --update

  Red Hat Enterprise Linux 7.2 has a new version of the System Security Services Daemon (SSSD), which introduces configuration that is incompatible with the Red Hat Virtualization Manager guest agent single sign-on implementation. This command ensures that single sign-on works.

- Fetch the details of an IPA user:

  # getent passwd ipa-user

- Record the IPA user’s UID and GID:

  ipa-user:*:936600010:936600001::/home/ipa-user:/bin/sh

- Create a home directory for the IPA user:

  # mkdir /home/ipa-user

- Assign ownership of the directory to the IPA user:

  # chown 936600010:936600001 /home/ipa-user
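The getent output in the steps above is a standard passwd-format line, so the UID, GID, and home directory sit at fixed field positions. The following Python sketch, with hypothetical helper names, extracts those fields and returns the mkdir and chown commands from the procedure as strings; it does not run them. Taking the directory from the home field of the entry is a design choice of this sketch. On the virtual machine itself, pwd.getpwnam("ipa-user") from the Python standard library performs the same name-service lookup as getent passwd.

```python
import shlex


def passwd_ids(entry: str) -> tuple[int, int, str]:
    """Return (UID, GID, home directory) from one passwd-format line.

    passwd lines have seven colon-separated fields:
    name:password:UID:GID:GECOS:home:shell
    """
    fields = entry.strip().split(":")
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}: {entry!r}")
    return int(fields[2]), int(fields[3]), fields[5]


def home_dir_commands(entry: str) -> list[str]:
    """Return the mkdir and chown commands from the procedure for this user."""
    uid, gid, home = passwd_ids(entry)
    quoted = shlex.quote(home)  # safe even if the path contains spaces
    return [f"mkdir {quoted}", f"chown {uid}:{gid} {quoted}"]
```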

Log in to the VM Portal using the user name and password of a user configured to use single sign-on and connect to the console of the virtual machine. You will be logged in automatically.

4.2.2. Configuring single sign-on for Windows virtual machines

To configure single sign-on for Windows virtual machines, the Windows guest agent must be installed on the guest virtual machine. The virtio-win ISO image provides this agent. If the virtio-win_version.iso image is not available in your storage domain, contact your system administrator.

Procedure

- Select the Windows virtual machine. Ensure the machine is powered up.

- On the virtual machine, locate the CD drive and open the CD.

- Launch virtio-win-guest-tools.

- Click Options.

- Select Install oVirt Guest Agent.

- Click OK.

- Click Install.

- When the installation completes, you are prompted to restart the machine to apply the changes.

Log in to the VM Portal using the user name and password of a user configured to use single sign-on and connect to the console of the virtual machine. You will be logged in automatically.

4.2.3. Disabling Single Sign-on for Virtual Machines

The following procedure explains how to disable single sign-on for a virtual machine.

Disabling Single Sign-On for Virtual Machines

- Select a virtual machine and click Edit.

- Click the Console tab.

- Select the Disable Single Sign On check box.

- Click OK.

4.3. Configuring USB Devices

A virtual machine connected with the SPICE protocol can be configured to connect directly to USB devices.

The USB device is redirected only if the virtual machine is active, in focus, and run from the VM Portal. You can enable USB redirection manually each time a device is plugged in, or set it to redirect automatically to active virtual machines in the Console Options window.

Note the distinction between the client machine and guest machine. The client is the hardware from which you access a guest. The guest is the virtual desktop or virtual server which is accessed through the VM Portal or Administration Portal.

USB redirection Enabled mode allows KVM/SPICE USB redirection for Linux and Windows virtual machines. Virtual (guest) machines require no guest-installed agents or drivers for native USB. On Red Hat Enterprise Linux clients, all packages required for USB redirection are provided by the virt-viewer package. On Windows clients, you must also install the usbdk package. Enabled USB mode is supported on the following clients and guests:

If you have a 64-bit architecture PC, you must use the 64-bit version of Internet Explorer to install the 64-bit version of the USB driver. USB redirection does not work if you install the 32-bit version on a 64-bit architecture. As long as you initially install the correct version of the USB driver, you can access USB redirection from both 32-bit and 64-bit browsers.

4.3.1. Using USB Devices on a Windows Client

The usbdk driver must be installed on the Windows client for the USB device to be redirected to the guest. Ensure the version of usbdk matches the architecture of the client machine. For example, the 64-bit version of usbdk must be installed on 64-bit Windows machines.

USB redirection is only supported when you open the virtual machine from the VM Portal.

Procedure

- When the usbdk driver is installed, click → and select a virtual machine that is configured to use the SPICE protocol.

- Click the Console tab.

- Select the USB enabled checkbox and click OK.

- Click → .

- Select the Enable USB Auto-Share check box and click OK.

- Start the virtual machine from the VM Portal and click Console to connect to that virtual machine.

- Plug your USB device into the client machine to make it appear automatically on the guest machine.

4.3.2. Using USB Devices on a Red Hat Enterprise Linux Client

The usbredir package enables USB redirection from Red Hat Enterprise Linux clients to virtual machines. usbredir is a dependency of the virt-viewer package, and is automatically installed together with that package.

USB redirection is only supported when you open the virtual machine from the VM Portal.

Procedure

- Click → .

- Select a virtual machine that has been configured to use the SPICE protocol and click Edit. This opens the Edit Virtual Machine window.

- Click the Console tab.

- Select the USB enabled checkbox and click OK.

- Click → .

- Select the Enable USB Auto-Share check box and click OK.

- Start the virtual machine from the VM Portal and click Console to connect to that virtual machine.

- Plug your USB device into the client machine to make it appear automatically on the guest machine.

4.4. Configuring Multiple Monitors

4.4.1. Configuring Multiple Displays for Red Hat Enterprise Linux Virtual Machines

A maximum of four displays can be configured for a single Red Hat Enterprise Linux virtual machine when connecting to the virtual machine using the SPICE protocol.

- Start a SPICE session with the virtual machine.

- Open the View drop-down menu at the top of the SPICE client window.

- Open the Display menu.

- Click the name of a display to enable or disable that display.

By default, Display 1 is the only display that is enabled on starting a SPICE session with a virtual machine. If no other displays are enabled, disabling this display will close the session.

4.4.2. Configuring Multiple Displays for Windows Virtual Machines

A maximum of four displays can be configured for a single Windows virtual machine when connecting to the virtual machine using the SPICE protocol.

- Click → and select a virtual machine.

- With the virtual machine in a powered-down state, click Edit.

- Click the Console tab.

- Select the number of displays from the Monitors drop-down list.

  This setting controls the maximum number of displays that can be enabled for the virtual machine. While the virtual machine is running, additional displays can be enabled up to this number.

- Click OK.

- Start a SPICE session with the virtual machine.

- Open the View drop-down menu at the top of the SPICE client window.

- Open the Display menu.

- Click the name of a display to enable or disable that display.

By default, Display 1 is the only display that is enabled on starting a SPICE session with a virtual machine. If no other displays are enabled, disabling this display will close the session.

4.5. Configuring Console Options

4.5.1. Console Options

Connection protocols are the underlying technology used to provide graphical consoles for virtual machines, allowing users to work with virtual machines much as they would with physical machines. Red Hat Virtualization currently supports the following connection protocols:

SPICE

Simple Protocol for Independent Computing Environments (SPICE) is the recommended connection protocol for both Linux virtual machines and Windows virtual machines. To open a console to a virtual machine using SPICE, use Remote Viewer.

VNC

Virtual Network Computing (VNC) can be used to open consoles to both Linux virtual machines and Windows virtual machines. To open a console to a virtual machine using VNC, use Remote Viewer or a VNC client.

RDP

Remote Desktop Protocol (RDP) can only be used to open consoles to Windows virtual machines, and is only available when you access a virtual machine from a Windows machine on which Remote Desktop has been installed. Before you can connect to a Windows virtual machine using RDP, you must set up remote sharing on the virtual machine and configure the firewall to allow remote desktop connections.

SPICE is not supported on virtual machines running Windows 8 or Windows 8.1. If a virtual machine running one of these operating systems is configured to use the SPICE protocol, it detects the absence of the required SPICE drivers and runs in VGA compatibility mode.

4.5.2. Accessing Console Options

You can configure several options for opening graphical consoles for virtual machines in the Administration Portal.

Procedure

- Click → and select a running virtual machine.

- Click → .

You can configure the connection protocols and video type in the Console tab of the Edit Virtual Machine window in the Administration Portal. Additional options specific to each of the connection protocols, such as the keyboard layout when using the VNC connection protocol, can be configured. See Virtual Machine Console settings explained for more information.

4.5.3. SPICE Console Options

When the SPICE connection protocol is selected, the following options are available in the Console Options window.

SPICE Options

- Map control-alt-del shortcut to ctrl+alt+end: Select this check box to map the Ctrl+Alt+Del key combination to Ctrl+Alt+End inside the virtual machine.

- Enable USB Auto-Share: Select this check box to automatically redirect USB devices to the virtual machine. If this option is not selected, USB devices will connect to the client machine instead of the guest virtual machine. To use the USB device on the guest machine, manually enable it in the SPICE client menu.

- Open in Full Screen: Select this check box for the virtual machine console to automatically open in full screen when you connect to the virtual machine. Press SHIFT+F11 to toggle full screen mode on or off.

- Enable SPICE Proxy: Select this check box to enable the SPICE proxy.

4.5.4. VNC Console Options

When the VNC connection protocol is selected, the following options are available in the Console Options window.

Console Invocation

- Native Client: When you connect to the console of the virtual machine, a file download dialog provides you with a file that opens a console to the virtual machine via Remote Viewer.

- noVNC: When you connect to the console of the virtual machine, a browser tab is opened that acts as the console.

VNC Options

- Map control-alt-delete shortcut to ctrl+alt+end: Select this check box to map the Ctrl+Alt+Del key combination to Ctrl+Alt+End inside the virtual machine.

4.5.5. RDP Console Options

When the RDP connection protocol is selected, the following options are available in the Console Options window.

Console Invocation

- Auto: The Manager automatically selects the method for invoking the console.

- Native client: When you connect to the console of the virtual machine, a file download dialog provides you with a file that opens a console to the virtual machine via Remote Desktop.

RDP Options

- Use Local Drives: Select this check box to make the drives on the client machine accessible on the guest virtual machine.

4.5.6. Remote Viewer Options

4.5.6.1. Remote Viewer Options

When you specify the Native client console invocation option, you will connect to virtual machines using Remote Viewer. The Remote Viewer window provides a number of options for interacting with the virtual machine to which it is connected.

Table 4.1. Remote Viewer Options

| Option | Hotkey |
|---|---|
| File | |
| View | |
| Send key | |
| Help | The About entry displays the version details of Virtual Machine Viewer that you are using. |
| Release Cursor from Virtual Machine | SHIFT+F12 |

4.5.6.2. Remote Viewer Hotkeys

You can access the hotkeys for a virtual machine in both full screen mode and windowed mode. If you are using full screen mode, you can display the menu containing the button for hotkeys by moving the mouse pointer to the middle of the top of the screen. If you are using windowed mode, you can access the hotkeys via the Send key menu on the virtual machine window title bar.

If vdagent is not running on the guest virtual machine, the mouse can become captured in the virtual machine window when you use it inside a virtual machine that is not in full screen mode. To unlock the mouse, press Shift+F12.

4.5.6.3. Manually Associating console.vv Files with Remote Viewer

If you are prompted to download a console.vv file when attempting to open a console to a virtual machine using the native client console option, and Remote Viewer is already installed, then you can manually associate console.vv files with Remote Viewer so that Remote Viewer can automatically use those files to open consoles.

Manually Associating console.vv Files with Remote Viewer

- Start the virtual machine.

- Open the Console Options window:

  - In the Administration Portal, click → .

  - In the VM Portal, click the virtual machine name and click the pencil icon beside Console.

- Change the console invocation method to Native client and click OK.

- Attempt to open a console to the virtual machine, then click Save when prompted to open or save the console.vv file.

- Click the location on your local machine where you saved the file.

- Double-click the console.vv file and select Select a program from a list of installed programs when prompted.

- In the Open with window, select Always use the selected program to open this kind of file and click the Browse button.

- Click the C:\Users\[user name]\AppData\Local\virt-viewer\bin directory and select remote-viewer.exe.

- Click Open and then click OK.

When you use the native client console invocation option to open a console to a virtual machine, Remote Viewer will automatically use the console.vv file that the Red Hat Virtualization Manager provides to open a console to that virtual machine without prompting you to select the application to use.

4.6. Configuring a Watchdog

4.6.1. Adding a Watchdog Card to a Virtual Machine

You can add a watchdog card to a virtual machine to monitor the operating system’s responsiveness.

Procedure

- Click → and select a virtual machine.

- Click Edit.

- Click the High Availability tab.

- Select the watchdog model to use from the Watchdog Model drop-down list.

- Select an action from the Watchdog Action drop-down list. This is the action that the virtual machine takes when the watchdog is triggered.

- Click OK.

4.6.2. Installing a Watchdog

To activate a watchdog card attached to a virtual machine, you must install the watchdog package on that virtual machine and start the watchdog service.

Installing Watchdogs

- Log in to the virtual machine on which the watchdog card is attached.

- Install the watchdog package and dependencies:

  # yum install watchdog

- Edit the /etc/watchdog.conf file and uncomment the following line:

  watchdog-device = /dev/watchdog

- Save the changes.

- Start the watchdog service and ensure this service starts on boot:

  - Red Hat Enterprise Linux 6:

    # service watchdog start
    # chkconfig watchdog on

  - Red Hat Enterprise Linux 7:

    # systemctl start watchdog.service
    # systemctl enable watchdog.service
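If you prepare many virtual machines, the edit to /etc/watchdog.conf can be scripted. The following Python sketch uncomments the watchdog-device line shown above and leaves every other line untouched. The helper names are hypothetical, and the sketch assumes the line is commented out with one or more leading # characters. Writing the file requires root privileges, and you still start and enable the watchdog service as described above.

```python
import re
from pathlib import Path

# A commented-out "watchdog-device = /dev/watchdog" line, allowing leading
# whitespace, one or more '#' characters, and spaces after them.
_COMMENTED_DEVICE = re.compile(r"^\s*#+\s*(watchdog-device\s*=\s*/dev/watchdog)\s*$")


def enable_watchdog_device(conf_text: str) -> str:
    """Return conf_text with the watchdog-device line uncommented.

    Lines that do not match are returned unchanged, so running this on an
    already edited file has no effect.
    """
    lines = conf_text.splitlines()
    for i, line in enumerate(lines):
        match = _COMMENTED_DEVICE.match(line)
        if match:
            lines[i] = match.group(1)
    result = "\n".join(lines)
    # Preserve a trailing newline if the original text had one.
    return result + "\n" if conf_text.endswith("\n") else result


def enable_in_file(path: str = "/etc/watchdog.conf") -> None:
    """Apply enable_watchdog_device to a file in place (requires root)."""
    conf = Path(path)
    conf.write_text(enable_watchdog_device(conf.read_text()))
```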

4.6.3. Confirming Watchdog Functionality

Confirm that a watchdog card has been attached to a virtual machine and that the watchdog service is active.

This procedure is provided for testing the functionality of watchdogs only and must not be run on production machines.

Confirming Watchdog Functionality

- Log in to the virtual machine on which the watchdog card is attached.

- Confirm that the watchdog card has been identified by the virtual machine:

  # lspci | grep watchdog -i

- Run one of the following commands to confirm that the watchdog is active:

  - Trigger a kernel panic:

    # echo c > /proc/sysrq-trigger

  - Terminate the watchdog service:

    # kill -9 $(pgrep watchdog)

The watchdog timer can no longer be reset, so the watchdog counter reaches zero after a short period of time. When the watchdog counter reaches zero, the action specified in the Watchdog Action drop-down menu for that virtual machine is performed.
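The following Python sketch is a toy model of that countdown, not the driver's implementation. It assumes the one-minute hardware period described in Parameters for Watchdogs in watchdog.conf, and takes a hypothetical list of times at which the watchdog service updated the device. Once the updates stop, as after either command above, the counter reaches zero one period after the last update.

```python
from typing import Sequence


def counter_reaches_zero_at(
    update_times: Sequence[float], timeout: float = 60.0, start: float = 0.0
) -> float:
    """Return the time (seconds) at which the watchdog counter reaches zero.

    update_times are the sorted moments at which the watchdog service reset
    the device. Each update restarts the countdown from `timeout`; updates
    are assumed to stop after the last entry.
    """
    last_update = start
    for t in update_times:
        if t - last_update > timeout:
            # The gap exceeded the timeout, so the counter expired before
            # this update could arrive.
            return last_update + timeout
        last_update = t
    return last_update + timeout
```

With updates at 10, 20, and 30 seconds and none afterwards, the function returns 90.0, that is, the Watchdog Action would run one minute after the last update.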

4.6.4. Parameters for Watchdogs in watchdog.conf

The following is a list of options for configuring the watchdog service available in the /etc/watchdog.conf file. To configure an option, you must uncomment that option and restart the watchdog service after saving the changes.

For a more detailed explanation of options for configuring the watchdog service and using the watchdog command, see the watchdog man page.

Table 4.2. watchdog.conf variables

| Variable name | Default Value | Remarks |
|---|---|---|
| | N/A | An IP address that the watchdog attempts to ping to verify whether that address is reachable. You can specify multiple IP addresses by adding additional |
| | N/A | A network interface that the watchdog will monitor to verify the presence of network traffic. You can specify multiple network interfaces by adding additional |
| | | A file on the local system that the watchdog will monitor for changes. You can specify multiple files by adding additional |
| | | The number of watchdog intervals after which the watchdog checks for changes to files. A |
| | | The maximum average load that the virtual machine can sustain over a one-minute period. If this average is exceeded, then the watchdog is triggered. A value of |
| | | The maximum average load that the virtual machine can sustain over a five-minute period. If this average is exceeded, then the watchdog is triggered. A value of |
| | | The maximum average load that the virtual machine can sustain over a fifteen-minute period. If this average is exceeded, then the watchdog is triggered. A value of |
| | | The minimum amount of virtual memory that must remain free on the virtual machine. This value is measured in pages. A value of |
| | | The path and file name of a binary file on the local system that will be run when the watchdog is triggered. If the specified file resolves the issues preventing the watchdog from resetting the watchdog counter, then the watchdog action is not triggered. |
| | N/A | The path and file name of a binary file on the local system that the watchdog will attempt to run during each interval. A test binary allows you to specify a file for running user-defined tests. |
| | N/A | The time limit, in seconds, for which user-defined tests can run. A value of |
| | N/A | The path to and name of a device for checking the temperature of the machine on which the |
| | | The maximum allowed temperature for the machine on which the |
| | | The email address to which email notifications are sent. |
| | | The interval, in seconds, between updates to the watchdog device. The watchdog device expects an update at least once every minute, and if there are no updates over a one-minute period, then the watchdog is triggered. This one-minute period is hard-coded into the drivers for the watchdog device, and cannot be configured. |
| | | When verbose logging is enabled for the |
| | | Specifies whether the watchdog is locked in memory. A value of |
| | | The schedule priority when the value of |
| | | The path and file name of a PID file that the watchdog monitors to see if the corresponding process is still active. If the corresponding process is not active, then the watchdog is triggered. |
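Because an option only takes effect when it is uncommented, a quick way to audit a watchdog.conf file is to list its active options and check the update interval against the fixed one-minute period described in the table. The following Python sketch does that under stated assumptions: the helper names are hypothetical, it treats everything after a # as a comment, and it assumes the interval option is named interval, as in the watchdog.conf(5) man page, with the last occurrence taking effect.

```python
from typing import Optional

# The table describes a one-minute period hard-coded in the watchdog drivers.
HARDWARE_PERIOD_S = 60


def active_options(conf_text: str) -> list[tuple[str, str]]:
    """Return (name, value) pairs for uncommented 'name = value' lines.

    A list is used instead of a dict because some options may be repeated,
    for example to watch several IP addresses.
    """
    options = []
    for raw in conf_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # simplification: '#' starts a comment
        name, sep, value = line.partition("=")
        if sep:
            options.append((name.strip(), value.strip()))
    return options


def configured_interval(conf_text: str, key: str = "interval") -> Optional[int]:
    """Return the active interval in seconds, or None if it is not set."""
    values = [value for name, value in active_options(conf_text) if name == key]
    return int(values[-1]) if values else None  # assumption: last occurrence wins


def interval_is_safe(seconds: int, period: int = HARDWARE_PERIOD_S) -> bool:
    """True if updates arrive strictly more often than the hardware period."""
    return 0 < seconds < period
```

After changing an option, restart the watchdog service so that the new value takes effect.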

4.7. Configuring Virtual NUMA

In the Administration Portal, you can configure virtual NUMA nodes on a virtual machine and pin them to physical NUMA nodes on one or more hosts. The host’s default policy is to schedule and run virtual machines on any available resources on the host. As a result, the resources backing a large virtual machine that cannot fit within a single host socket could be spread out across multiple NUMA nodes. Over time these resources may be moved around, leading to poor and unpredictable performance. Configure and pin virtual NUMA nodes to avoid this outcome and improve performance.

Configuring virtual NUMA requires a NUMA-enabled host. To confirm whether NUMA is enabled on a host, log in to the host and run numactl --hardware. The output of this command should show at least two NUMA nodes. You can also view the host’s NUMA topology in the Administration Portal by selecting the host from the Hosts tab and clicking NUMA Support. This button is only available when the selected host has at least two NUMA nodes.
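If you check many hosts, the numactl --hardware check can be scripted. The following Python sketch assumes that the output contains a summary line of the form available: N nodes, as printed by numactl; the helper names are hypothetical, and the command must run on the host itself, not on the Manager machine.

```python
import re
import subprocess

# numactl --hardware begins with a summary line such as "available: 2 nodes (0-1)".
_AVAILABLE = re.compile(r"^available:\s+(\d+)\s+nodes?\b", re.MULTILINE)


def numa_node_count(numactl_output: str) -> int:
    """Return the number of NUMA nodes reported by numactl --hardware."""
    match = _AVAILABLE.search(numactl_output)
    if match is None:
        raise ValueError("no 'available: N nodes' line in numactl output")
    return int(match.group(1))


def supports_virtual_numa(numactl_output: str) -> bool:
    """Virtual NUMA pinning needs a host with at least two NUMA nodes."""
    return numa_node_count(numactl_output) >= 2


def read_numactl() -> str:
    """Run numactl --hardware on the local host and return its output."""
    result = subprocess.run(
        ["numactl", "--hardware"], capture_output=True, text=True, check=True
    )
    return result.stdout
```

On a host, supports_virtual_numa(read_numactl()) returns True only when at least two NUMA nodes are reported.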

If you define NUMA pinning, the migration mode defaults to Allow manual migration only.

Configuring Virtual NUMA

- Click → and select a virtual machine.

- Click Edit.

- Click Show Advanced Options.

- Click the Host tab.

- Select the Specific Host(s) radio button and select the host(s) from the list. The selected host(s) must have at least two NUMA nodes.

- Click NUMA Pinning.

- In the NUMA Topology window, click and drag virtual NUMA nodes from the box on the right to host NUMA nodes on the left as required, and click OK.

- Select Strict, Preferred, or Interleave from the Tune Mode drop-down list in each NUMA node. If the selected mode is Preferred, the NUMA Node Count must be set to 1.

- You can also set the NUMA pinning policy automatically by selecting Resize and Pin NUMA from the CPU Pinning Policy drop-down list under the CPU Allocation settings in the Resource Allocation tab:

  - None: Runs without any CPU pinning.

  - Manual: Runs a manually specified virtual CPU on a specific physical CPU and a specific host. Available only when the virtual machine is pinned to a host.

  - Resize and Pin NUMA: Resizes the virtual CPU and NUMA topology of the virtual machine according to the host, and pins them to the host resources.

  - Dedicated: Exclusively pins virtual CPUs to host physical CPUs. Available for cluster compatibility level 4.7 or later. If the virtual machine has NUMA enabled, all nodes must be unpinned.

  - Isolate Threads: Exclusively pins virtual CPUs to host physical CPUs. Each virtual CPU gets a physical core. Available for cluster compatibility level 4.7 or later. If the virtual machine has NUMA enabled, all nodes must be unpinned.

- Click OK.

If you do not pin the virtual NUMA node to a host NUMA node, the system defaults to the NUMA node that contains the host device’s memory-mapped I/O (MMIO), provided that there are one or more host devices and all of those devices are from a single NUMA node.

4.8. Configuring Satellite errata viewing for a virtual machine

In the Administration Portal, you can configure a virtual machine to display the available errata. The virtual machine needs to be associated with a Red Hat Satellite server to show available errata.

Red Hat Virtualization 4.4 supports viewing errata with Red Hat Satellite 6.6.

Prerequisites

- The Satellite server must be added as an external provider.

- The Manager and any virtual machines on which you want to view errata must all be registered in the Satellite server by their respective FQDNs. This ensures that external content host IDs do not need to be maintained in Red Hat Virtualization. Virtual machines added using an IP address cannot report errata.

- The host that the virtual machine runs on also needs to be configured to receive errata information from Satellite.

- The virtual machine must have the ovirt-guest-agent package installed. This package enables the virtual machine to report its host name to the Red Hat Virtualization Manager, which enables the Red Hat Satellite server to identify the virtual machine as a content host and report the applicable errata.

- The virtual machine must be registered to the Red Hat Satellite server as a content host.

- Use Red Hat Satellite remote execution to manage packages on hosts.

The Katello agent is deprecated and will be removed in a future Satellite version. Migrate your processes to use the remote execution feature to update clients remotely.
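Several of the prerequisites above can be checked before you edit the virtual machine. The sketch below uses a hypothetical record type. It flags a machine registered by IP address, which the text says cannot report errata. The check that a name contains a dot is only a rough stand-in for "is an FQDN". The same name check applies to the Manager's registration.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass
class ErrataCheckInput:  # hypothetical summary of one VM's prerequisites
    registered_name: str
    has_guest_agent: bool
    is_content_host: bool
    host_configured_for_errata: bool


def is_ip_address(name: str) -> bool:
    try:
        ipaddress.ip_address(name)
    except ValueError:
        return False
    return True


def errata_blockers(vm: ErrataCheckInput) -> list[str]:
    """List the unmet prerequisites; an empty list means none were detected."""
    problems = []
    if is_ip_address(vm.registered_name):
        problems.append("registered by IP address; such VMs cannot report errata")
    elif "." not in vm.registered_name:
        # Rough heuristic only: a name without a dot is unlikely to be an FQDN.
        problems.append("registered name does not look like an FQDN")
    if not vm.has_guest_agent:
        problems.append("ovirt-guest-agent package is not installed")
    if not vm.is_content_host:
        problems.append("not registered to Satellite as a content host")
    if not vm.host_configured_for_errata:
        problems.append("host is not configured to receive errata from Satellite")
    return problems
```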

Procedure

- Click Compute → Virtual Machines and select a virtual machine.

- Click Edit.

- Click the Foreman/Satellite tab.

- Select the required Satellite server from the Provider drop-down list.

- Click OK.

Additional resources

- Setting up Satellite errata viewing for a host in the Administration Guide

- Installing the Guest Agents, Tools, and Drivers on Linux in the Virtual Machine Management Guide for Red Hat Enterprise Linux virtual machines.

- Installing the Guest Agents, Tools, and Drivers on Windows in the Virtual Machine Management Guide for Windows virtual machines.

4.9. Configuring Headless Virtual Machines

You can configure a headless virtual machine when it is not necessary to access the machine via a graphical console. This headless machine will run without graphical and video devices. This can be useful in situations where the host has limited resources, or to comply with virtual machine usage requirements such as real-time virtual machines.

Headless virtual machines can be administered via a Serial Console, SSH, or any other service for command line access. Headless mode is applied via the Console tab when creating or editing virtual machines and machine pools, and when editing templates. It is also available when creating or editing instance types.

If you are creating a new headless virtual machine, you can use the Run Once window to access the virtual machine via a graphical console for the first run only. See Virtual Machine Run Once settings explained for more details.

Prerequisites

- If you are editing an existing virtual machine, and the Red Hat Virtualization guest agent has not been installed, note the machine's IP address before selecting Headless Mode.

- Before running a virtual machine in headless mode, set the GRUB configuration for this machine to console mode; otherwise, the guest operating system's boot process will hang. To set console mode, comment out the splashimage flag and add the serial console settings in the GRUB menu configuration file:

    #splashimage=(hd0,0)/grub/splash.xpm.gz
    serial --unit=0 --speed=9600 --parity=no --stop=1
    terminal --timeout=2 serial

Restart the virtual machine if it is running when selecting the Headless Mode option.
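The GRUB change above can be applied as a text transformation. This sketch assumes a GRUB legacy menu file, which is the syntax the snippet above uses; GRUB 2 guests are configured differently. It comments out `splashimage` and adds the `serial` and `terminal` lines from the snippet if they are missing. The new lines go before the first `title` entry, because GRUB legacy global commands must precede the menu entries.

```python
# Global GRUB legacy commands taken from the snippet above.
SERIAL_LINES = [
    "serial --unit=0 --speed=9600 --parity=no --stop=1",
    "terminal --timeout=2 serial",
]


def to_console_mode(grub_conf: str) -> str:
    """Comment out splashimage and ensure the serial console lines are present."""
    lines = []
    for line in grub_conf.splitlines():
        stripped = line.lstrip()
        if stripped.startswith("splashimage="):
            lines.append("#" + stripped)
        else:
            lines.append(line)
    present = {line.strip() for line in lines}
    missing = [cmd for cmd in SERIAL_LINES if cmd not in present]
    # Global commands belong before the first 'title' menu entry.
    first_title = next(
        (i for i, line in enumerate(lines) if line.lstrip().startswith("title")),
        len(lines),
    )
    lines[first_title:first_title] = missing
    return "\n".join(lines) + "\n"
```

The function is idempotent, so running it twice does not duplicate lines. Keep a backup of the original file before writing the result back inside the guest.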

Configuring a Headless Virtual Machine

- Click Compute → Virtual Machines and select a virtual machine.

- Click Edit.

- Click the Console tab.

- Select Headless Mode. All other fields in the Graphical Console section are disabled.

- Optionally, select Enable VirtIO serial console to enable communicating with the virtual machine via serial console. This is highly recommended.

- Reboot the virtual machine if it is running. See Rebooting a Virtual Machine.

4.10. Configuring High Performance Virtual Machines, Templates, and Pools

You can configure a virtual machine for high performance, so that it runs with performance metrics as close to bare metal as possible. When you choose high performance optimization, the virtual machine is configured with a set of automatic settings and recommended manual settings for maximum efficiency.

The high performance option is only accessible in the Administration Portal, by selecting High Performance from the Optimized for drop-down list in the Edit or New virtual machine, template, or pool window. This option is not available in the VM Portal.

The high performance option is supported by Red Hat Virtualization 4.2 and later. It is not available for earlier versions.

Virtual Machines

If you change the optimization mode of a running virtual machine to high performance, some configuration changes require restarting the virtual machine.

To change the optimization mode of a new or existing virtual machine to high performance, you may need to make manual changes to the cluster and to the pinned host configuration first.

A high performance virtual machine has certain limitations, because enhanced performance has a trade-off in decreased flexibility:

- If pinning is set for CPU threads, I/O threads, emulator threads, or NUMA nodes, according to the recommended settings, only a subset of cluster hosts can be assigned to the high performance virtual machine.

- Many devices are automatically disabled, which limits the virtual machine’s usability.
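The first limitation above explains why pinning shrinks the set of usable hosts. This sketch uses a deliberately simplified, hypothetical host model. It assumes NUMA nodes and physical CPUs are numbered from zero, and keeps only the hosts that actually contain every pinned node and CPU.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Host:  # hypothetical, simplified host description
    name: str
    numa_nodes: int  # nodes numbered 0..numa_nodes-1 (assumption)
    cpus: int  # physical CPUs numbered 0..cpus-1 (assumption)


def eligible_hosts(
    hosts: list[Host], pinned_host_nodes: set[int], pinned_pcpus: set[int]
) -> list[str]:
    """Names of hosts that contain every pinned NUMA node and physical CPU."""
    need_nodes = max(pinned_host_nodes, default=-1) + 1
    need_cpus = max(pinned_pcpus, default=-1) + 1
    return [h.name for h in hosts if h.numa_nodes >= need_nodes and h.cpus >= need_cpus]
```

With no pinning, every host qualifies. Each pin to a high node or CPU index removes the smaller hosts from the candidate list.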

Templates and Pools

High performance templates and pools are created and edited in the same way as virtual machines. If a high performance template or pool is used to create new virtual machines, those virtual machines inherit this property and its configurations. Certain settings, however, are not inherited and must be set manually:

- CPU pinning

- Virtual NUMA and NUMA pinning topology

- I/O and emulator threads pinning topology

- Pass-through Host CPU
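The inheritance rule above can be pictured as a filter over the template's settings. The setting keys in this sketch are hypothetical labels for the four items listed; they are not the Manager's internal field names.

```python
from __future__ import annotations

# Hypothetical keys for the four settings that are not inherited.
NOT_INHERITED = frozenset({
    "cpu_pinning",
    "virtual_numa_and_numa_pinning",
    "io_and_emulator_threads_pinning",
    "pass_through_host_cpu",
})


def new_vm_settings_from(source: dict) -> tuple[dict, list[str]]:
    """Split template/pool settings into inherited ones and ones to set manually."""
    inherited = {k: v for k, v in source.items() if k not in NOT_INHERITED}
    set_manually = sorted(k for k in source if k in NOT_INHERITED)
    return inherited, set_manually
```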

4.10.1. Creating a High Performance Virtual Machine, Template, or Pool

To create a high performance virtual machine, template, or pool:

- In the New or Edit window, select High Performance from the Optimized for drop-down menu.

  Selecting this option automatically performs certain configuration changes to this virtual machine, which you can view by clicking different tabs. You can change them back to their original settings or override them. (See Automatic High Performance Configuration Settings for details.) If you change a setting, its latest value is saved.

- Click OK.

  If you have not set any manual configurations, the High Performance Virtual Machine/Pool Settings screen describing the recommended manual configurations appears.

  If you have set some of the manual configurations, the High Performance Virtual Machine/Pool Settings screen displays the settings you have not made.

  If you have set all the recommended manual configurations, the High Performance Virtual Machine/Pool Settings screen does not appear.

- If the High Performance Virtual Machine/Pool Settings screen appears, click Cancel to return to the New or Edit window to perform the manual configurations. See Configuring the Recommended Manual Settings for details.

  Alternatively, click OK to ignore the recommendations. The result may be a drop in the level of performance.

- Click OK.
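The three cases for the High Performance Virtual Machine/Pool Settings screen reduce to one small function. In this sketch, the list of recommended settings is supplied by the caller. Any labels used with it are hypothetical placeholders, not the exact entries the screen shows.

```python
from __future__ import annotations


def pending_recommendations(recommended: list[str], configured: set[str]) -> list[str] | None:
    """Return what the settings screen would list, or None if it would not appear."""
    pending = [item for item in recommended if item not in configured]
    # Nothing configured: all items. Some configured: the rest. All configured: None.
    return pending or None
```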

You can view the optimization type in the General tab of the details view of the virtual machine, pool, or template.

Certain configurations can override the high performance settings. For example, if you select an instance type for a virtual machine before selecting High Performance from the Optimized for drop-down menu and performing the manual configuration, the instance type configuration will not affect the high performance configuration. If, however, you select the instance type after the high performance configurations, you should verify the final configuration in the different tabs to ensure that the high performance configurations have not been overridden by the instance type.

The last-saved configuration usually takes priority.

Support for instance types is now deprecated, and will be removed in a future release.
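The ordering effect described above behaves like a last-write-wins merge. This sketch is a simplified model under that assumption, and the setting names are hypothetical. Each saved layer overrides earlier values, so an instance type applied after the high performance settings can undo some of them.

```python
def effective_settings(*saved_in_order: dict) -> dict:
    """Merge setting layers in save order; later layers override earlier ones."""
    result: dict = {}
    for layer in saved_in_order:
        result.update(layer)
    return result
```

For example, if the high performance layer sets `usb` to `False` and an instance type saved afterwards sets it to `True`, the merged result keeps `True`. This is why the final tabs are worth re-checking.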

4.10.1.1. Automatic High Performance Configuration Settings

The following table summarizes the automatic settings. The Enabled (Y/N) column indicates whether each setting is enabled (Y) or disabled (N). The Applies to column indicates the relevant resources:

- VM — Virtual machine

- T — Template

- P — Pool

- C — Cluster

Table 4.3. Automatic High Performance Configuration Settings

| Setting | Enabled (Y/N) | Applies to |
|---|---|---|
| Headless Mode (Console tab) | | |
| USB Enabled (Console tab) | | |
| Smartcard Enabled (Console tab) | | |
| Soundcard Enabled (Console tab) | | |
| Enable VirtIO serial console (Console tab) | | |
| Allow manual migration only (Host tab) | | |
| Pass-Through Host CPU (Host tab) | | |
| Highly Available [1] (High Availability tab) | | |
| No-Watchdog (High Availability tab) | | |
| Memory Balloon Device (Resource Allocation tab) | | |
| I/O Threads Enabled [2] (Resource Allocation tab) | | |
| Paravirtualized Random Number Generator PCI (virtio-rng) device (Random Generator tab) | | |
| I/O and emulator threads pinning topology | | |
| CPU cache layer 3 | | |

[1] Highly Available is not automatically enabled. If you select it manually, high availability should be enabled for pinned hosts only.

[2] Number of I/O threads = 1.

4.10.1.2. I/O and Emulator Threads Pinning Topology (Automatic Settings)

The I/O and emulator threads pinning topology is a new configuration setting for Red Hat Virtualization 4.2. It requires that I/O threads, NUMA nodes, and NUMA pinning be enabled and set for the virtual machine. Otherwise, a warning will appear in the engine log.

Pinning topology:

- The first two CPUs of each NUMA node are pinned.

- If all vCPUs fit into one NUMA node of the host:

  - The first two vCPUs are automatically reserved/pinned.

  - The remaining vCPUs are available for manual vCPU pinning.

- If the virtual machine spans more than one NUMA node:

  - The first two CPUs of the NUMA node with the most pins are reserved/pinned.

  - The remaining pinned NUMA node(s) are for vCPU pinning only.

Pools do not support I/O and emulator threads pinning.
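The selection rule above can be sketched as a small function. It takes a hypothetical map of host NUMA node to CPU IDs, plus the number of virtual NUMA pins per host node. It returns the two CPUs reserved for I/O and emulator threads, using the only pinned node or the node with the most pins. The remaining pinned nodes stay for vCPU pinning. Breaking ties by dictionary order is an assumption. A Python logger stands in for the engine-log warning.

```python
from __future__ import annotations

import logging

logger = logging.getLogger("engine")  # hypothetical stand-in for the engine log


def io_emulator_pinning(
    host_node_cpus: dict[int, list[int]],
    vm_pins_per_host_node: dict[int, int],
    io_threads_enabled: bool,
    is_pool: bool = False,
) -> list[int] | None:
    """Return the host CPUs reserved for I/O and emulator threads, or None."""
    if is_pool:
        raise ValueError("pools do not support I/O and emulator threads pinning")
    if not io_threads_enabled or not vm_pins_per_host_node:
        # Missing prerequisites only produce a warning, as described above.
        logger.warning("I/O threads, NUMA nodes and NUMA pinning are required; skipping")
        return None
    # One pinned node: use it. Several: use the node with the most pins.
    node = max(vm_pins_per_host_node, key=vm_pins_per_host_node.get)
    return sorted(host_node_cpus[node])[:2]
```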